Background

We recently put together a tender response that included a detailed look at our work with continuous integration and continuous deployment for the Single Digital Presence (SDP). This detailed analysis was written by Salsa's Alex Skrypnyk, and we thought it would make a great blog post.

Our work with the Victorian Department of Premier and Cabinet (DPC) on the SDP project involved a large project team working across different organisations (Salsa and DPC) and across different time zones. This required extra attention to keep the feedback loop as short as possible, especially between the development and quality assurance teams.

An automated testing and deployment continuous integration (CI) pipeline was set up to standardise project development workflows, decrease regressions and reduce the cost of manual, error-prone deployments.

We set it up because the faster we could validate features in a production-like environment, the sooner we would know whether they passed or failed, the faster we could mitigate problems, and the faster we could innovate.

Introduction to CI/CD

Wikipedia defines continuous integration as “the practice of merging all developer working copies to a shared mainline several times a day.” It involves integrating early and often to avoid the pitfalls of "integration hell". The practice aims to reduce rework and thus reduce costs and save time.

CI usually runs on a separate non-production server and has a set of checks that must pass before changes are merged into a mainline. In open-source projects, merging is usually performed manually by a developer, and CI plays the role of a status check alongside the manual code review. Once the status checks indicate that a newly introduced change is stable, a developer can decide when to merge the code.

Some organisations prefer to streamline the change delivery process and have every change deployed automatically to the production environment. This requires adding a deployment step to the CI pipeline, which turns it into a continuous deployment (CD) pipeline.
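
The following minimal Python sketch makes the distinction concrete. It does not model CircleCI, and `Step` and `run_pipeline` are hypothetical names used only for illustration. A CI pipeline runs its status checks in order and only reports the result, leaving the merge decision to a developer. Passing a `deploy` step turns the same pipeline into a CD pipeline, which deploys only when every check has passed.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Step:
    """A hypothetical pipeline step: a name plus a callable returning True on success."""
    name: str
    run: Callable[[], bool]


def run_pipeline(checks: List[Step], deploy: Optional[Step] = None) -> List[str]:
    """Run status checks in order, stopping at the first failure.

    Without `deploy` this behaves like CI: it only reports results.
    With `deploy` it behaves like CD: deployment runs only if all checks pass.
    """
    log: List[str] = []
    for step in checks:
        passed = step.run()
        log.append(f"{step.name}: {'pass' if passed else 'fail'}")
        if not passed:
            return log  # a failed check blocks the merge (CI) or the deployment (CD)
    if deploy is not None:
        deployed = deploy.run()
        log.append(f"{deploy.name}: {'pass' if deployed else 'fail'}")
    return log
```

In this sketch, the only difference between CI and CD is the optional final step. That mirrors the idea that CD is a CI pipeline with deployment appended.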

Both CI and CD are part of a broader software engineering approach to delivering changes: continuous delivery. This approach helps reduce the cost, time and risk of delivering changes by allowing more incremental updates to applications in production.

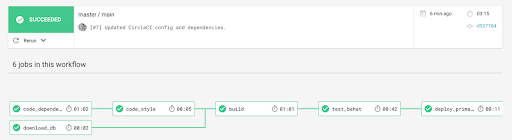

Example of a generic CI/CD pipeline configuration in the CircleCI cloud service, with three phases: initialisation, testing and deployment.

Initialisation

CI/CD pipeline configuration is expected to provide a well-defined and consistent environment for every run. But that consistency is worth nothing if the configuration does not closely match the production servers: builds may pass on CI/CD but cause unexpected behaviour in production. To avoid this, the CI/CD configuration needs to be set up by a highly skilled DevOps engineer who can assess the current production environment configuration and replicate it in code for the CI/CD pipeline.
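
One way to watch for a gap between CI and production is to compare the component versions each environment reports. The Python sketch below assumes both are already available as simple name-to-version mappings; how they are collected is left out. `find_drift` is a hypothetical helper, and any component names and versions passed to it are illustrative, not the SDP stack.

```python
from typing import Dict, List, Tuple

MISSING = "<missing>"


def find_drift(ci: Dict[str, str], prod: Dict[str, str]) -> List[Tuple[str, str, str]]:
    """Return (component, ci_version, prod_version) for every mismatch, sorted by name.

    A component present in only one environment is reported with MISSING
    on the other side.
    """
    drift: List[Tuple[str, str, str]] = []
    for component in sorted(set(ci) | set(prod)):
        ci_version = ci.get(component, MISSING)
        prod_version = prod.get(component, MISSING)
        if ci_version != prod_version:
            drift.append((component, ci_version, prod_version))
    return drift
```

A pipeline could treat a non-empty result as a failed check, so that environment drift surfaces in CI rather than in production.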

Fortunately, the latest advancements in containerisation technology allow developers to use the same configuration for local, CI/CD and production environments. This also leads to lower costs for the initial CI/CD pipeline setup of each project.

Another step in the initialisation phase is asset synchronisation: databases and files may be required to run tests and deployments. This step can usually run in parallel with environment provisioning and may use local caching, saving time in future builds and deployments.
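
The following Python sketch illustrates both ideas under stated assumptions:

- Provisioning and asset synchronisation run concurrently in a thread pool.
- Synchronised assets are cached under a key derived from a SHA-256 hash of an input's contents, so an unchanged input reuses the cached copy.

This follows the idea behind checksum-based cache keys in CI services such as CircleCI. However, `cache_key`, `restore_or_fetch`, `initialise` and the in-memory `dict` standing in for cache storage are all hypothetical.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Tuple, TypeVar

T = TypeVar("T")


def cache_key(prefix: str, input_contents: bytes) -> str:
    """Derive a cache key that changes whenever the input contents change."""
    return f"{prefix}-{hashlib.sha256(input_contents).hexdigest()}"


def restore_or_fetch(cache: Dict[str, T], key: str, fetch: Callable[[], T]) -> Tuple[T, bool]:
    """Return (value, cache_hit); fetch and store the value only on a cache miss."""
    if key in cache:
        return cache[key], True
    value = fetch()
    cache[key] = value
    return value, False


def initialise(provision: Callable[[], str], sync_assets: Callable[[], str]) -> Tuple[str, str]:
    """Run environment provisioning and asset synchronisation in parallel."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        env_future = pool.submit(provision)
        assets_future = pool.submit(sync_assets)
        return env_future.result(), assets_future.result()
```

Keying the cache on content, rather than on a date or build number, means the expensive fetch reruns only when its input actually changes.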

Good CI/CD platforms support parallelisation and caching, speeding up build processes and reducing feedback time.

Testing

Projects with multiple developers usually require a collaborative and sustainable process. Before the CI/CD era, merging code from several developers took a significant amount of time and involved manual, error-prone actions that still couldn't guarantee everything was accounted for in the end result. A CI/CD pipeline, on the other hand, provides an automated, repeatable process that runs without human intervention. Defining a set of repeatable checks and relying on them ensures that the result is well-defined and consistent.

For example, some developers prefer a specific coding style. It is good practice for the team to agree on a specific standard and enforce it on the project. But a verbal agreement is not enough, as developers may simply forget to check that the standard is being followed. A solution is to use code linters: tools with programmed rules that assess the codebase and report anything that does not adhere to the defined standards. The CI/CD pipeline is the place to run such tools. Using the CI/CD pipeline also removes review comments based on personal preference: if the CI/CD build passes, the coding standard was followed.
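
To show the principle, here is a deliberately tiny Python linter with two hypothetical rules:

- no trailing whitespace;
- a maximum line length of 80 characters.

A real project would run an established linter for its language instead. The point is only that the rules are checked mechanically and that a non-zero exit code fails the CI/CD job. `lint` and `main` are illustrative names.

```python
from typing import List, Tuple

MAX_LINE_LENGTH = 80  # hypothetical project rule


def lint(source: str) -> List[Tuple[int, str]]:
    """Return (line number, message) for every rule violation in `source`."""
    violations: List[Tuple[int, str]] = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        if line != line.rstrip():
            violations.append((lineno, "trailing whitespace"))
        if len(line) > MAX_LINE_LENGTH:
            violations.append((lineno, f"line exceeds {MAX_LINE_LENGTH} characters"))
    return violations


def main(paths: List[str]) -> int:
    """Lint each file and return a process exit code: 0 if clean, 1 otherwise."""
    failed = False
    for path in paths:
        with open(path, encoding="utf-8") as handle:
            for lineno, message in lint(handle.read()):
                print(f"{path}:{lineno}: {message}")
                failed = True
    return 1 if failed else 0
```

A CI job would invoke this as a script ending in `sys.exit(main(sys.argv[1:]))`. Because the CI runner treats any non-zero exit status as a failed step, a style violation fails the build.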

The same applies to running tests. Tests may pass locally and lead developers to conclude that the work is complete. But if they fail in the CI/CD pipeline, something was overlooked, and the change will not be delivered to production. Having tests pass in the CI/CD pipeline is what gives confidence in the stability of delivered changes.

Deployment

The deployment phase is usually quite straightforward. It typically calls a third-party API endpoint to trigger the deployment, or pushes a codebase artefact to another repository. It is also usually filtered by branch, so deployments may run only for production branches and tags.
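
The branch and tag filtering can be sketched as a single predicate. In this Python sketch, the patterns (`main`, `develop` and `release/...` branches, plus numeric `1.2.3`-style tags) are hypothetical; each project would define its own. `should_deploy` is an illustrative name.

```python
import re
from typing import Optional

# Hypothetical patterns; each project would define its own.
DEPLOY_BRANCHES = re.compile(r"main|develop|release/.+")
DEPLOY_TAGS = re.compile(r"\d+\.\d+\.\d+")


def should_deploy(branch: Optional[str], tag: Optional[str]) -> bool:
    """Decide whether a build should run the deployment step.

    Tag builds deploy only for release-style tags such as 1.2.3; branch
    builds deploy only for the listed production branches. fullmatch()
    ensures that a branch like "mainline" does not match "main".
    """
    if tag is not None:
        return DEPLOY_TAGS.fullmatch(tag) is not None
    if branch is not None:
        return DEPLOY_BRANCHES.fullmatch(branch) is not None
    return False
```

In CircleCI itself, this kind of rule is usually declared in configuration rather than code. You add a `filters` key on a workflow job, with `branches` and `tags` entries that accept `only`/`ignore` values, including regular expressions.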

But there may also be more advanced cases where deployment steps start other CI/CD pipelines from neighbouring projects and pass artefacts to them.

The challenge

Salsa has identified several critical challenges for CI/CD pipeline implementation:

Which CI/CD product or service to choose? The product or service should be stable and reliable, incur low running costs and be future-proof.

How to ease maintenance and lower the initial per-project setup time? Not every project has the budget for a CI/CD pipeline setup. We needed a solution generic enough to be replicated between projects without much per-project change, but flexible enough to accommodate change when required.

How to minimise build time? We needed to make sure that builds are running as fast as possible and do not block each other.

Which checks to run and which tools to use? Although the answer depends on each project's technology, knowing which tools to use and which to avoid decreases setup costs.

The solution

Choosing the CI provider

Salsa reviewed multiple options, including TravisCI, CircleCI, ProboCI and Jenkins, before choosing CircleCI for its rich feature set and future-proof container technology. Other criteria were job parallelisation, caching and zero maintenance. As a cloud service, CircleCI handles all infrastructure and lets developers configure builds in a single configuration file. It also provides access to builds through a user-friendly UI, integrated with GitHub for authentication and access control.

Jobs and tools

With a distributed development team, it was crucial to identify the types of jobs that needed to be run in the CI/CD pipeline to minimise feedback time from the build. We classified the jobs into:

- Environment provisioning

- Code standards checks

- Unit testing

- Integration testing

- Deployments
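
These job types can be arranged as a dependency graph so that independent jobs run side by side. The Python sketch below uses the standard-library `graphlib` module (Python 3.9+). It assumes one plausible set of dependencies, which is illustrative rather than the project's actual workflow. It then groups the jobs into stages whose members can run in parallel. The job names and `stages` are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical workflow: each job maps to the set of jobs it depends on.
JOBS: Dict[str, Set[str]] = {
    "provision": set(),
    "code_standards": {"provision"},
    "unit_tests": {"provision"},
    "integration_tests": {"provision"},
    "deploy": {"code_standards", "unit_tests", "integration_tests"},
}


def stages(graph: Dict[str, Set[str]]) -> List[List[str]]:
    """Group jobs into stages; every job in a stage can run in parallel."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # also raises CycleError if the workflow has a cycle
    result: List[List[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # all jobs whose dependencies are done
        result.append(ready)
        sorter.done(*ready)
    return result
```

With this graph, provisioning runs alone and the three check jobs fan out in parallel. Deployment waits for all of them.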

At first, environment provisioning was coded directly in the configuration file. This made it hosting-independent and flexible, but not completely identical to production. In the next iteration of the CI/CD pipeline, we switched to Docker containers, which made the CI configuration small and generic, and therefore very easy to maintain across projects.

Drupal 8 was chosen as the platform to deliver the required functionality. It is a mature open-source product with well-defined coding and security standards, plus many contributed modules and development tools, including testing frameworks, already available for any Drupal-based project. All this made it easier to choose the tools to run in the CI/CD pipeline.

Reducing build times

As more features were added to the project, build times grew with the number of new unit and integration tests. In turn, new features increasingly had to wait for other features' builds to finish, constantly blocking developers.

The solution was to split tests into groups and run them in parallel. CircleCI supported parallelisation out of the box, not only across the whole pipeline but also within specific jobs. This meant a single build could run the fast, non-parallel jobs first and then “fan out” the slow test jobs into multiple parallel jobs, reducing the overall build time.
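
CircleCI has its own timing-based splitting, for example the `circleci tests split --split-by=timings` CLI command used together with a job's `parallelism` setting. The Python sketch below does not reproduce that command. It illustrates one common greedy heuristic, longest-processing-time-first, which is not necessarily what CircleCI does internally. `split_by_timings` is a hypothetical name, and the timings are assumed to come from earlier runs.

```python
import heapq
from typing import Dict, List, Tuple


def split_by_timings(timings: Dict[str, float], workers: int) -> List[List[str]]:
    """Assign tests to `workers` parallel buckets, balancing total run time.

    Tests are taken from slowest to fastest (ties broken by name), and each
    goes to the bucket with the smallest running total so far.
    """
    if workers < 1:
        raise ValueError("workers must be at least 1")
    buckets: List[List[str]] = [[] for _ in range(workers)]
    heap: List[Tuple[float, int]] = [(0.0, i) for i in range(workers)]  # (total, bucket)
    heapq.heapify(heap)
    for test, seconds in sorted(timings.items(), key=lambda kv: (-kv[1], kv[0])):
        total, index = heapq.heappop(heap)  # least-loaded bucket
        buckets[index].append(test)
        heapq.heappush(heap, (total + seconds, index))
    return buckets
```

For example, take hypothetical timings of 5, 4, 3, 3 and 1 seconds for tests `a` to `e` across two workers. The sketch yields `[["a", "d"], ["b", "c", "e"]]`, and each bucket totals 8 seconds. Balancing by recorded time rather than by test count keeps one slow test group from dominating the build.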

The end result

The DPC project is still using the same CI/CD pipeline, which doesn't require any maintenance. New features are constantly added to existing projects with the assurance that changes are safe to deploy to production. New projects have a CI/CD pipeline set up in hours rather than days or weeks. The team continues to build high-quality code that contributes to a stable, tested product.

CircleCI has (again) proven to be a flexible and reliable third-party service.